In the world of containerized microservices, it is critical for each service to be able to report its health. I would say it's one of the crucial things to have so that your service can serve its clients with zero downtime. Based on the health status of the services, whatever container orchestrator you are using (Kubernetes, Azure Service Fabric, AWS ECS, etc.) can make a number of decisions, such as whether it needs to spin up new instances to maintain availability, or whether it should continue rolling out updates to the services.

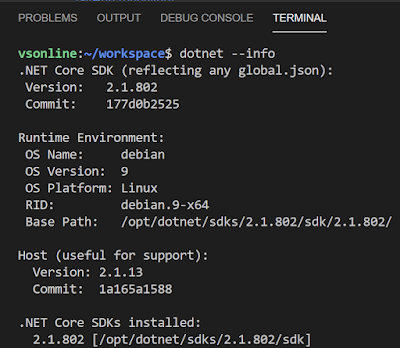

Fortunately, ASP.NET Core comes with a built-in health checks feature that was released as part of ASP.NET Core 2.2. In this post, let's see how we can set up health checks for a simple ASP.NET Core Web Application.

I have created an ASP.NET Core 3.1 Web Application using the default Visual Studio template.

To start with, you just need to register the required services and define the endpoint to report health.

    public void ConfigureServices(IServiceCollection services)
    {
        // Other registrations
        services.AddHealthChecks();
    }

    public void Configure(IApplicationBuilder app, IWebHostEnvironment env)
    {
        // Other configuration
        app.UseEndpoints(endpoints =>
        {
            // Other endpoint mappings
            endpoints.MapHealthChecks("/health");
        });
    }

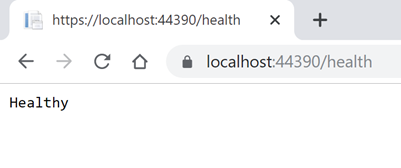

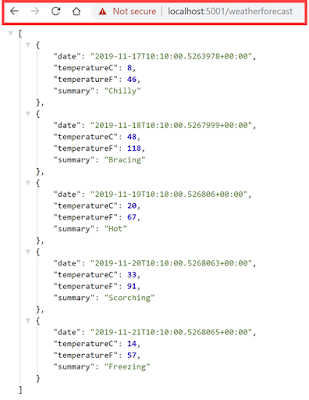

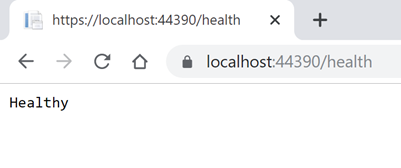

Now when you run the app and navigate to the `/health` endpoint, you can see it reporting its default status.

    Healthy
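Orchestrators usually look at the HTTP status code of the health endpoint rather than the response body. By default the middleware returns 200 for Healthy and Degraded, and 503 for Unhealthy. Here is a minimal sketch that spells that mapping out through `HealthCheckOptions.ResultStatusCodes`. The values below simply restate the defaults, and the `Startup` shape is assumed to match the default template.

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Diagnostics.HealthChecks;
using Microsoft.AspNetCore.Http;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Diagnostics.HealthChecks;

public class Startup
{
    public void ConfigureServices(IServiceCollection services)
    {
        services.AddHealthChecks();
    }

    public void Configure(IApplicationBuilder app)
    {
        app.UseRouting();

        app.UseEndpoints(endpoints =>
        {
            endpoints.MapHealthChecks("/health", new HealthCheckOptions
            {
                // Explicit status code per health status (same as the defaults).
                ResultStatusCodes =
                {
                    [HealthStatus.Healthy] = StatusCodes.Status200OK,
                    [HealthStatus.Degraded] = StatusCodes.Status200OK,
                    [HealthStatus.Unhealthy] = StatusCodes.Status503ServiceUnavailable
                }
            });
        });
    }
}
```

If your orchestrator should treat Degraded as a failure, you could map it to a non-2xx code here instead.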

Almost all applications depend on other services, such as a database or another service, so ideally you want a health check for each of them. For that, you can easily extend your health check registration as below.

    services.AddHealthChecks()
        .AddCheck("MyCheck1", () => HealthCheckResult.Healthy("I am healthy"), new List<string> { "tag1" })
        .AddCheck("MyCheck2", () => HealthCheckResult.Unhealthy("I am unhealthy"), new List<string> { "tag2" });

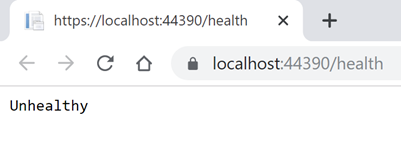

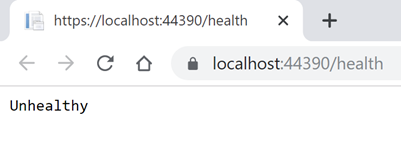

With the above registration, our health endpoint will return Unhealthy, because one of the checks (MyCheck2) reports Unhealthy.

    Unhealthy
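The plain-text response only shows the overall status, so it doesn't tell you which check failed. One way to see the individual results is a custom response writer. The sketch below is only an illustration: `HealthResponseWriter` and `WriteJson` are hypothetical names, and the JSON shape is an assumption, not something the framework prescribes.

```csharp
using System.Linq;
using System.Text.Json;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;
using Microsoft.Extensions.Diagnostics.HealthChecks;

public static class HealthResponseWriter
{
    // Hypothetical helper: writes the overall status plus one entry per check as JSON.
    public static Task WriteJson(HttpContext context, HealthReport report)
    {
        context.Response.ContentType = "application/json";

        var payload = new
        {
            status = report.Status.ToString(),
            checks = report.Entries.Select(entry => new
            {
                name = entry.Key,
                status = entry.Value.Status.ToString(),
                description = entry.Value.Description
            })
        };

        return context.Response.WriteAsync(JsonSerializer.Serialize(payload));
    }
}
```

To use it, pass it through the options when mapping the endpoint: `endpoints.MapHealthChecks("/health", new HealthCheckOptions { ResponseWriter = HealthResponseWriter.WriteJson });`. The response then lists MyCheck1 and MyCheck2 with their own status and description.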

You have a lot of control over how the health check logic is implemented. You just need a class that implements `IHealthCheck` and its `CheckHealthAsync` method, as below.

    using System.Threading;
    using System.Threading.Tasks;
    using Microsoft.Extensions.Diagnostics.HealthChecks;

    public class ExampleHealthCheck : IHealthCheck
    {
        public Task<HealthCheckResult> CheckHealthAsync(HealthCheckContext context, CancellationToken cancellationToken = default)
        {
            // Replace this with the real check, e.g. pinging a dependency.
            var isHealthy = true;

            if (isHealthy)
            {
                return Task.FromResult(
                    HealthCheckResult.Healthy("A healthy result."));
            }

            return Task.FromResult(
                HealthCheckResult.Unhealthy("An unhealthy result."));
        }
    }

Then register it as below.

    services.AddHealthChecks()
        .AddCheck<ExampleHealthCheck>("MyCheck3", null, new List<string> { "tag3" });
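The tags passed at registration become useful when you want an endpoint to run only a subset of the checks. Here is a rough sketch using `HealthCheckOptions.Predicate`. The `/health/tag1` route is a hypothetical name picked for illustration, and the two inline checks are the ones registered earlier in this post.

```csharp
using System.Collections.Generic;
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Diagnostics.HealthChecks;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Diagnostics.HealthChecks;

public class Startup
{
    public void ConfigureServices(IServiceCollection services)
    {
        services.AddHealthChecks()
            .AddCheck("MyCheck1", () => HealthCheckResult.Healthy("I am healthy"), new List<string> { "tag1" })
            .AddCheck("MyCheck2", () => HealthCheckResult.Unhealthy("I am unhealthy"), new List<string> { "tag2" });
    }

    public void Configure(IApplicationBuilder app)
    {
        app.UseRouting();

        app.UseEndpoints(endpoints =>
        {
            // Runs every registered check.
            endpoints.MapHealthChecks("/health");

            // Hypothetical extra endpoint: runs only the checks tagged "tag1".
            endpoints.MapHealthChecks("/health/tag1", new HealthCheckOptions
            {
                Predicate = registration => registration.Tags.Contains("tag1")
            });
        });
    }
}
```

With this setup, `/health` still aggregates both checks, while `/health/tag1` only evaluates MyCheck1. A class-based check such as `ExampleHealthCheck` can be filtered the same way through its own tag.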

There is a nice package, Xabaril/AspNetCore.Diagnostics.HealthChecks, which already contains health check logic for most providers, so you don't have to reinvent the wheel. Please do check it out.

There are many more options for customizing the health check feature. Please see the following documentation for more details.

Health checks in ASP.NET Core

Happy Coding.

Regards,

Jaliya
